How to Detect OAuth Access Token Theft in Azure

Stealing OAuth access tokens to gain access to a user’s Azure account is a technique that threat groups have actively used over the past few years, and I’ve observed it in several engagements involving Chinese APT groups. Generally, the attacker sends a phishing or spear-phishing email with a link that asks the user to grant access to a malicious application through OAuth’s authorization code flow. Once the user consents, the attacker-controlled application can access the user’s data.

I’ve broken the blog into two components:

- Attack overview

- Detection methodology

ATTACK OVERVIEW

The attacker registers a malicious application and sends a phishing email with a link that takes the user to a consent page like the one in the image below. The page generally shows the app name (in this instance, “evilapp”), whether the app is verified or unverified, and the option to accept or decline.

- The user authenticates and consents to the application

- An authorization code is returned to the attacker’s application via the redirect URI, and the application exchanges it for an access token

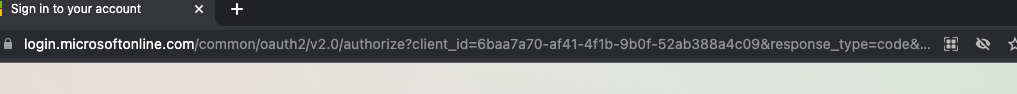

- Client_id: This is the client_id of the malicious application that the attacker has set up in the attacker’s tenant (it could even be created in YOUR tenant, which is more insidious)

- Redirect_URI: This is the URL where the application is hosted and run from. For this example, I ran it from my localhost, hence the URL “http://127.0.0.1:5000/getAToken”. In a real attack, this will generally be a malicious domain.

- Scope: These are the API permissions that the attacking application is asking you to consent to. In this example, only one permission is requested: “User.Read”
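When triaging a reported phishing link, it can help to pull these three parameters out of the URL programmatically. The snippet below is a minimal sketch using only the Python standard library; the helper name `extract_oauth_params` and the all-zero client_id are placeholders I made up for illustration, and the link is a hypothetical example built around the localhost redirect URI from this post.

```python
from urllib.parse import urlparse, parse_qs


def extract_oauth_params(url: str) -> dict:
    """Return the client_id, redirect_uri, and scopes from an authorization URL."""
    # parse_qs percent-decodes values and maps each key to a list of values.
    query = parse_qs(urlparse(url).query)

    def first(name):
        values = query.get(name)
        return values[0] if values else None

    # Scopes are space-delimited once decoded ("+" and "%20" both become spaces).
    scope = first("scope") or ""
    return {
        "client_id": first("client_id"),
        "redirect_uri": first("redirect_uri"),
        "scopes": scope.split(),
    }


# Hypothetical consent link; the client_id is a made-up placeholder.
link = (
    "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"
    "?client_id=00000000-0000-0000-0000-000000000000"
    "&response_type=code"
    "&redirect_uri=http%3A%2F%2F127.0.0.1%3A5000%2FgetAToken"
    "&scope=User.Read"
)
params = extract_oauth_params(link)
print(params)
```

An unfamiliar client_id combined with a redirect URI on a domain you don’t recognise is a good reason to look more closely at the requested scopes.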

Step 2: Review granted permissions

- User.Read – Allows the application to read the profile of a user and basic company information

- User.ReadWrite – Allows the application to read and update user profile information

- User.ReadWrite.All – Allows the application to read and write the full profiles of all users. This can be used to create and delete users and to reset user passwords.

- Mail.ReadWrite – Allows the application to read and write emails

- Calendars.ReadWrite – Allows the application to read and write to the user’s calendar

- Files.ReadWrite – Allows the application to read and write files

- User.Export.All – Allows the application to request an export of a user’s personal data
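As a rough way to prioritise review, the permissions above can be checked against a short watchlist. This is only a sketch: the set `HIGH_RISK_SCOPES` and the function `high_risk_scopes` are names I’ve invented here, and which scopes belong on the watchlist is a judgment call (I’ve included everything from the list except the read-only “User.Read”).

```python
# Scopes from the list above that allow writes or bulk data access.
# Treating these as "high risk" is an assumption made for this sketch.
HIGH_RISK_SCOPES = {
    "User.ReadWrite",
    "User.ReadWrite.All",
    "Mail.ReadWrite",
    "Calendars.ReadWrite",
    "Files.ReadWrite",
    "User.Export.All",
}


def high_risk_scopes(granted: str) -> list:
    """Return the space-delimited granted scopes that are on the watchlist, sorted."""
    # Compare case-insensitively, but report the canonical spelling.
    lookup = {scope.lower(): scope for scope in HIGH_RISK_SCOPES}
    found = {lookup[s.lower()] for s in granted.split() if s.lower() in lookup}
    return sorted(found)


print(high_risk_scopes("User.Read Mail.ReadWrite Files.ReadWrite"))
```

A consent that only includes “User.Read” returns an empty list here, which does not make it benign; it just means the grant is lower priority than one that includes mail or file access.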

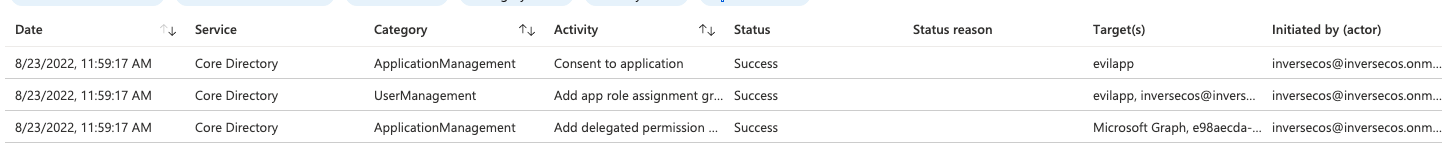

- Category: ApplicationManagement

- Activity: Add delegated permission grant

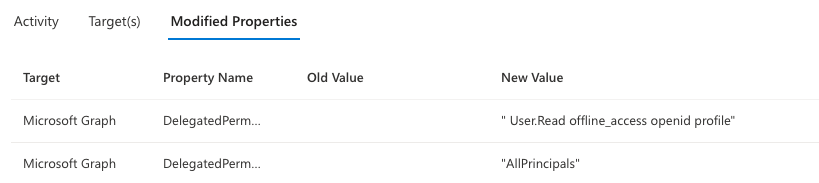

- Target Property Name: Look for the permissions that were granted. In my instance you can see it was the “User.Read” permission.

- Category: UserManagement

- Activity: Add app role assignment grant to user

- Status: success

- ObjectID: <Malicious application Object ID>

- Target Display Name: <Malicious application name>

- Category: ApplicationManagement

- Activity: Consent to application

- Initiated by (actor): Look for the malicious application Object ID

- Target Display Name: Look for the malicious application name

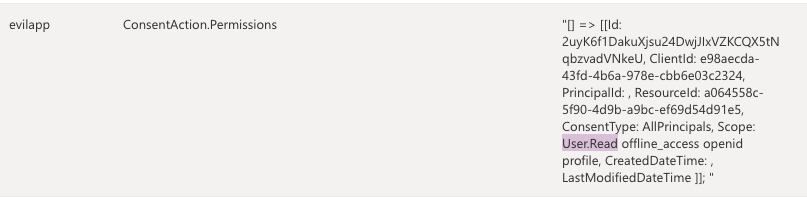

- Modified Properties: Look for the ConsentAction.Permissions and confirm the permissions consented by the user to the malicious application. I’ve highlighted here in purple the permission of “User.Read”.

- User sign-ins (interactive)

- Application: Malicious application name

- Application ID: The client ID of the malicious application
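The audit log indicators listed earlier (the delegated permission grant, the app role assignment, and the consent event) can also be matched in exported logs with a small script. The sketch below assumes the records are dictionaries shaped like Microsoft Graph `directoryAudit` entries (`category`, `activityDisplayName`, `targetResources`, `modifiedProperties`); the function names and the sample records are hypothetical, and the `newValue` string is a simplified placeholder rather than real log output.

```python
# (category, activity) pairs taken from the audit log indicators in this post.
SUSPICIOUS_ACTIVITIES = {
    ("ApplicationManagement", "Add delegated permission grant"),
    ("UserManagement", "Add app role assignment grant to user"),
    ("ApplicationManagement", "Consent to application"),
}


def find_consent_events(records, app_name=None):
    """Return audit records matching the indicators, optionally for one app name."""
    hits = []
    for record in records:
        key = (record.get("category"), record.get("activityDisplayName"))
        if key not in SUSPICIOUS_ACTIVITIES:
            continue
        targets = record.get("targetResources") or []
        names = [target.get("displayName") for target in targets]
        if app_name is not None and app_name not in names:
            continue
        hits.append(record)
    return hits


def consented_permissions(record):
    """Collect ConsentAction.Permissions values from a record's modified properties."""
    values = []
    for target in record.get("targetResources") or []:
        for prop in target.get("modifiedProperties") or []:
            if prop.get("displayName") == "ConsentAction.Permissions":
                values.append(prop.get("newValue"))
    return values


# Hypothetical sample records for illustration only.
sample = [
    {
        "category": "ApplicationManagement",
        "activityDisplayName": "Consent to application",
        "result": "success",
        "targetResources": [
            {
                "displayName": "evilapp",
                "modifiedProperties": [
                    {
                        "displayName": "ConsentAction.Permissions",
                        "newValue": "Scope: User.Read",  # simplified placeholder
                    }
                ],
            }
        ],
    },
    {
        "category": "UserManagement",
        "activityDisplayName": "Update user",
        "result": "success",
        "targetResources": [],
    },
]

hits = find_consent_events(sample, app_name="evilapp")
for hit in hits:
    print(hit["activityDisplayName"], consented_permissions(hit))
```

Passing `app_name` narrows the results to events whose target is the named application; leave it out to list every consent-related event and review the application names afterwards.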
